Adaptive on-line performance evaluation of video trackers


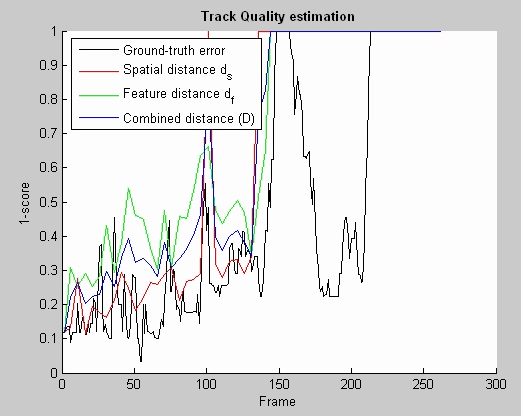

This page presents sample results of ARTE (Adaptive Reverse Tracking Evaluation), our framework for evaluating the performance of tracking algorithms without ground-truth data. The framework has two main stages. The first estimates the tracker condition to identify the intervals during which the target is lost. The second measures the quality of the estimated track while the tracker is successful. A key novelty of the framework is its ability to evaluate video trackers that undergo multiple failures and recoveries over long sequences. Successful tracking is identified by analyzing the uncertainty of the tracker, whereas recovery from tracking errors is determined using a time-reversibility constraint.
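The two ideas above, uncertainty-based detection of failure and time-reversibility-based detection of recovery, can be illustrated with a minimal Python sketch. It is not the ARTE implementation. The spread measure (trace of the weighted particle covariance), the fixed threshold, the IoU overlap test and all function names (`state_uncertainty`, `is_lost`, `iou`, `recovered`) are hypothetical choices made for illustration only.

```python
import numpy as np


def state_uncertainty(particles, weights):
    """Spread of a weighted particle set: trace of its weighted covariance.

    particles: (N, 2) array-like of candidate target positions (x, y).
    weights:   (N,) non-negative importance weights (need not sum to one).
    """
    pts = np.asarray(particles, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                          # normalise the weights
    mean = w @ pts                           # weighted mean position, shape (2,)
    diff = pts - mean
    cov = (w[:, None] * diff).T @ diff       # weighted covariance, shape (2, 2)
    return float(np.trace(cov))


def is_lost(uncertainty, threshold):
    """Hypothetical failure rule: the target is considered lost when the
    particle spread exceeds a fixed threshold."""
    return uncertainty > threshold


def iou(a, b):
    """Intersection over union of two boxes given as (x, y, width, height)."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def recovered(reference_box, reverse_track_box, min_iou=0.5):
    """Time-reversibility test (sketch): the tracker is run backwards from the
    current frame to an earlier frame where the track was trusted; if the
    reverse track lands close to the trusted box, the forward track is
    assumed to be back on the target."""
    return iou(reference_box, reverse_track_box) >= min_iou


if __name__ == "__main__":
    square = [[0, 0], [2, 0], [0, 2], [2, 2]]
    u = state_uncertainty(square, [1, 1, 1, 1])
    print(u, is_lost(u, threshold=5.0))                # 2.0 False
    print(recovered((0, 0, 10, 10), (5, 0, 10, 10)))   # IoU = 1/3 -> False
```

A fixed threshold is used here only to keep the sketch short. The framework described above is adaptive, so a real implementation would not rely on a single hand-set value.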

The original test sequences with ground-truth data and a MATLAB implementation are also provided.

Adaptive on-line performance evaluation of video trackers

J. SanMiguel, A. Cavallaro and J. Martínez

IEEE Transactions on Image Processing, 21(5):2812–2823, May 2012

Contact Information

Juan C. SanMiguel

- CAVIAR dataset: targets P1 (Browse_WhileWaiting1), P2 (OneLeaveShopReenter1front), P3 (OneLeaveShopReenter2front) and P4 (ThreePastShop2cor) are available here.
- PETS2001 dataset: targets P5-P10 (Camera1_testing) are available here.
- PETS2010 dataset: targets P11-P14 (S2_L1_view001), P15-P16 (S2_L2_view0001) and P17-P18 (S2_L3_view001) are available here.
- Targets F1 (seq_bb), F2 (seq_mb), F3 (seq_sb) and F4 (seq_villains2) are available here.
- VISOR dataset: targets F5 (visor_video_1) and F6 (visor_video_2) are available here.

The ground truth for all the targets in the dataset is available here.

Download the videos¹ and images from the links below to view sample experimental results of the proposed framework. The tracking results were generated with a color-based particle filter [1]. The results also include a comparison with representative state-of-the-art approaches for empirical standalone quality evaluation: Observation Likelihood (OL) [2], covariance of the target state (SU) [3], frame-by-frame reverse-tracking evaluation using template inverse matching (TIM) [4], and full-length reverse-tracking evaluation using the same tracking algorithm (FBF) [5].

¹The XviD ISO MPEG-4 codec is needed to watch the video files (download it here).
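Color-based particle filters such as [1] weight each particle by comparing the color histogram of its candidate region with a reference histogram using the Bhattacharyya coefficient. The minimal Python sketch below illustrates that weighting under stated assumptions. It is not the MATLAB tracker distributed on this page. The joint RGB binning, the `sigma` value and the function names (`color_histogram`, `bhattacharyya`, `particle_weight`) are illustrative choices, and the constant normalization factor of the Gaussian is omitted.

```python
import numpy as np


def color_histogram(patch, bins=8):
    """Normalised joint RGB histogram of an (H, W, 3) uint8 image patch."""
    pixels = np.asarray(patch).reshape(-1, 3)
    hist, _ = np.histogramdd(pixels, bins=(bins, bins, bins),
                             range=((0, 256), (0, 256), (0, 256)))
    hist = hist.ravel()
    return hist / hist.sum()


def bhattacharyya(p, q):
    """Bhattacharyya coefficient between two normalised histograms (1 = identical)."""
    return float(np.sum(np.sqrt(p * q)))


def particle_weight(p, q, sigma=0.2):
    """Unnormalised Gaussian weight of a candidate histogram q against the
    reference p, using the squared Bhattacharyya distance d^2 = 1 - rho."""
    d2 = max(0.0, 1.0 - bhattacharyya(p, q))   # clamp tiny negative round-off
    return float(np.exp(-d2 / (2.0 * sigma ** 2)))


if __name__ == "__main__":
    red = np.zeros((4, 4, 3), dtype=np.uint8)
    red[..., 0] = 255
    blue = np.zeros((4, 4, 3), dtype=np.uint8)
    blue[..., 2] = 255
    ref = color_histogram(red)
    print(particle_weight(ref, color_histogram(red)))   # 1.0 (identical colours)
    print(particle_weight(ref, color_histogram(blue)))  # about 3.7e-06 (no overlap)
```

Smaller values of `sigma` make the weights more selective, so that particles whose color distribution differs even slightly from the reference receive little weight.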

The software listed in this section can be freely used for research purposes.

Two separate tools are provided for the main stages described in the paper (also available on GitHub):

- Tracker condition estimator (MATLAB implementation)
- Track quality estimator (MATLAB implementation)

The implementation of the particle filter tracker and the data needed by the above tools are also provided:

- The MATLAB implementation of the color-based particle filter tracker used to generate the tracking data is available here*
- Data (sequences, ground truth and tracking data)

*If you use this software, please cite the related reference.

References

[1] K. Nummiaro, E. Koller-Meier, and L. Van Gool, “An adaptive colour-based particle filter,” Image and Vision Computing, 21(1):99–110, 2003.

[2] N. Vaswani, “Additive change detection in nonlinear systems with unknown change parameters,” IEEE Trans. on Signal Processing, 55(3):859–872, 2007.

[3] E. Maggio, F. Smeraldi, and A. Cavallaro, “Adaptive multifeature tracking in a particle filtering framework,” IEEE Trans. on Circuits and Systems for Video Technology, 17(10):1348–1359, 2007.

[4] R. Liu, S. Li, X. Yuan, and R. He, “Online determination of track loss using template inverse matching,” in Proc. of the Int. Workshop on Visual Surveillance, Marseille (France), 17 October 2008.

[5] H. Wu, A. Sankaranarayanan, and R. Chellappa, “Online empirical evaluation of tracking algorithms,” IEEE Trans. on Pattern Analysis and Machine Intelligence, 32(8):1443–1458, 2010.