UnSyn-MF Dataset: Unified Synthetic Multiple Field Of View

UnSyn-MF is a synthetic dataset, sampled at 10 fps, that provides scenes captured by cameras with different Field of View (FOV) values. It was built to support the paper "Self-Supervised Monocular Depth Estimation on Unseen Synthetic Cameras" (CIARP 2023), whose main objective is to reduce the dependence of self-supervised monocular depth estimation models on camera intrinsic parameters. That study required a dataset in which identical sequences are available from cameras with distinct intrinsic parameters. Since no available dataset met this criterion, we created a publicly accessible one. UnSyn-MF comprises both RGB and depth images, all generated using the CARLA simulator through Unreal Engine 4.
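
Since the depth images come from CARLA, the sketch below shows how a pixel in CARLA's raw depth encoding maps to meters. It assumes the depth frames are stored in that raw 24-bit RGB encoding, which this page does not confirm; if the files already hold metric depth, no decoding is needed. The function name `carla_depth_to_meters` and the `far_m` parameter are illustrative.

```python
def carla_depth_to_meters(r: int, g: int, b: int, far_m: float = 1000.0) -> float:
    """Decode one pixel of CARLA's raw depth encoding into meters.

    Assumption: the frame uses CARLA's raw depth encoding, where depth is
    packed into 24 bits with R as the least significant byte and B as the
    most significant byte, and the far plane is at 1000 m.
    """
    normalized = (r + g * 256 + b * 256 * 256) / (256 ** 3 - 1)
    return far_m * normalized


# The two extremes of the encoding.
print(carla_depth_to_meters(0, 0, 0))        # 0.0
print(carla_depth_to_meters(255, 255, 255))  # 1000.0
```

Pixels at the maximum value typically correspond to sky or anything beyond the far plane, so they are usually masked out before computing depth errors.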

The training data consists of 6,000 frames distributed across three video sequences, each recorded with five different cameras with FOV values of 40, 60, 80, 100, and 120 degrees. This yields a training set of 30,000 images. Two further sequences of 1,200 frames each are reserved for model evaluation. They were captured with the same five cameras, yielding a test set of 12,000 images.

Dataset Description

The following tables summarize the dataset's structure: five sequences totaling 8,400 frames, each captured with five distinct cameras, for 42,000 images overall. Sequences 1, 2, and 3 are intended for model training, and sequences 4 and 5 for evaluation.

| Sequence | # Images (per camera) | Image Size (pixels) |
|---|---|---|
| 1 | 2000 | 1980 x 1080 |
| 2 | 2000 | 1980 x 1080 |
| 3 | 2000 | 1980 x 1080 |
| 4 | 1200 | 1980 x 1080 |
| 5 | 1200 | 1980 x 1080 |

| Camera ID | FOV (degrees) |
|---|---|
| 1 | 40 |
| 2 | 60 |
| 3 | 80 |
| 4 | 100 |
| 5 | 120 |
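
The totals quoted above follow directly from the two tables. The short sketch below encodes the tables as dictionaries and recomputes the split sizes. The constant and function names are illustrative, and the duration estimate assumes every frame of a sequence is consecutive at the stated 10 fps.

```python
FPS = 10  # sampling rate stated in the introduction

# Camera ID -> FOV in degrees (second table).
CAMERA_FOVS_DEG = {1: 40, 2: 60, 3: 80, 4: 100, 5: 120}

# Sequence ID -> frames per camera (first table).
FRAMES_PER_SEQUENCE = {1: 2000, 2: 2000, 3: 2000, 4: 1200, 5: 1200}

TRAIN_SEQUENCES = (1, 2, 3)
TEST_SEQUENCES = (4, 5)


def total_images(sequences) -> int:
    """Images in a split: frames per sequence times the number of cameras."""
    frames = sum(FRAMES_PER_SEQUENCE[s] for s in sequences)
    return frames * len(CAMERA_FOVS_DEG)


def duration_seconds(sequence: int) -> float:
    """Approximate sequence length, assuming consecutive frames at FPS."""
    return FRAMES_PER_SEQUENCE[sequence] / FPS


print(total_images(TRAIN_SEQUENCES))                   # 30000
print(total_images(TEST_SEQUENCES))                    # 12000
print(total_images(TRAIN_SEQUENCES + TEST_SEQUENCES))  # 42000
print(duration_seconds(1), duration_seconds(4))        # 200.0 120.0
```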

Visual Examples

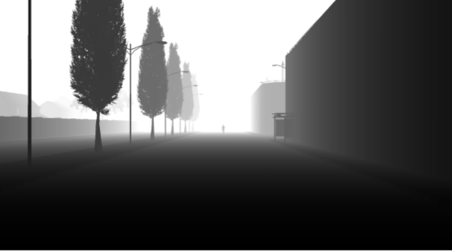

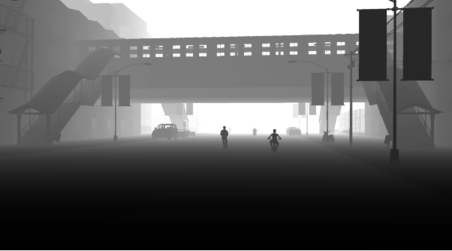

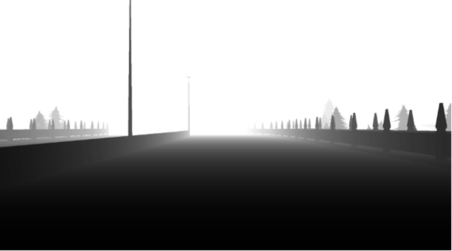

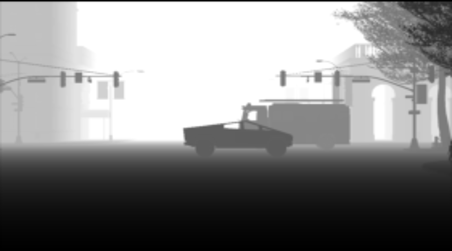

The figure shows examples from UnSyn-MF. It has five rows, one per sequence. The left column displays a randomly selected RGB image from each sequence, and the right column shows the corresponding depth map. These examples give a preview of the diversity of scenes in the dataset.

Figure panels, one row per sequence (Sequences 1 to 5): RGB image (left column) and depth map (right column).

The two evaluation sequences differ markedly in complexity. As depicted in the figure above, Sequence 5 is set in an urban environment with many buildings and traffic elements, whereas Sequence 4 takes place in a simpler rural environment with fewer visual elements. Users of this dataset should take this difference into account when designing experiments and interpreting results.
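
One way to respect this difference is to report errors for each evaluation sequence separately instead of pooling them. The sketch below does this with two metrics that are common in the depth estimation literature, absolute relative error and RMSE. The choice of metrics, the function names, and the flat-list input format are assumptions made for illustration, not the evaluation protocol of the related paper.

```python
import math
from collections import defaultdict


def abs_rel(pred, gt):
    """Mean of |pred - gt| / gt over pixels with valid (positive) ground truth."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    return sum(abs(p - g) / g for p, g in pairs) / len(pairs)


def rmse(pred, gt):
    """Root mean squared error over pixels with valid (positive) ground truth."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    return math.sqrt(sum((p - g) ** 2 for p, g in pairs) / len(pairs))


def report_by_sequence(samples):
    """Group (sequence_id, predicted_depths, true_depths) samples by sequence.

    Depths are flat lists in meters. Returns {sequence_id: {metric: value}}.
    """
    grouped = defaultdict(lambda: ([], []))
    for sequence_id, pred, gt in samples:
        grouped[sequence_id][0].extend(pred)
        grouped[sequence_id][1].extend(gt)
    return {
        seq: {"abs_rel": abs_rel(p, g), "rmse": rmse(p, g)}
        for seq, (p, g) in grouped.items()
    }


# Toy values only, to show the call shape; these are not dataset results.
toy = [(4, [2.0], [1.0]), (5, [1.0, 3.0], [1.0, 2.0])]
for seq, metrics in sorted(report_by_sequence(toy).items()):
    print(seq, metrics)
```

The same grouping can be keyed on `(sequence_id, camera_id)` to also separate results by FOV.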

Different Cameras

The figure below illustrates the effect of FOV by showing the same scene captured by cameras with different values of this parameter. The images in the left column show the scene at FOV values of 40, 60, 80, 100, and 120 degrees, respectively, and the right column displays the corresponding depth maps. The comparison shows how FOV changes the appearance of an identical scene.

Figure panels, one row per camera: Camera ID 1 (FOV 40), Camera ID 2 (FOV 60), Camera ID 3 (FOV 80), Camera ID 4 (FOV 100), Camera ID 5 (FOV 120).
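
Because the five cameras differ only in FOV, their intrinsic parameters can be approximated with the pinhole relation f = W / (2 tan(FOV / 2)). The sketch below assumes the FOV values are horizontal and given in degrees (as for CARLA's camera `fov` attribute), that pixels are square, and that the principal point is at the image center. The function name `intrinsics_from_fov` is illustrative.

```python
import math


def intrinsics_from_fov(fov_deg: float, width: int, height: int):
    """Return a 3x3 pinhole intrinsic matrix K as nested lists."""
    # Focal length in pixels from the horizontal FOV: f = W / (2 * tan(FOV / 2)).
    focal = width / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    # Assumption: square pixels (fx == fy) and a centered principal point.
    return [
        [focal, 0.0, width / 2.0],
        [0.0, focal, height / 2.0],
        [0.0, 0.0, 1.0],
    ]


# Image size as listed in the sequence table; FOV values from the camera table.
WIDTH, HEIGHT = 1980, 1080
for camera_id, fov in enumerate((40, 60, 80, 100, 120), start=1):
    K = intrinsics_from_fov(fov, WIDTH, HEIGHT)
    print(f"camera {camera_id}: FOV {fov} deg -> focal length {K[0][0]:.1f} px")
```

A wider FOV gives a shorter focal length, so the same object covers fewer pixels. This is the variation in intrinsics that the dataset is designed to expose.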

Conclusion

UnSyn-MF is a synthetic dataset that we hope will be a valuable resource for the research community. With its structured sequences, range of camera FOV values, and distinct urban and rural evaluation scenarios, it can be used to train and evaluate models and to investigate the effects of different Field of View settings.

Download links

Sequence 1 of the UnSyn-MF dataset can be downloaded from this link (32.1 GB).

Sequence 2 of the UnSyn-MF dataset can be downloaded from this link (38.8 GB).

Sequence 3 of the UnSyn-MF dataset can be downloaded from this link (34.7 GB).

Sequence 4 of the UnSyn-MF dataset can be downloaded from this link (19.7 GB).

Sequence 5 of the UnSyn-MF dataset can be downloaded from this link (24.8 GB).

Related Publication: Cecilia Diana, Juan Ignacio Bravo, Javier Montalvo, Álvaro García and Jesús Bescós. Self-Supervised Monocular Depth Estimation on Unseen Synthetic Cameras. Iberoamerican Congress on Pattern Recognition (CIARP 2023).

Acknowledgement: This work is part of the HVD (PID2021-125051OB-I00), IND2020/TIC-17515 and SEGA-CV (TED2021-131643A-I00) projects, funded by the Ministerio de Ciencia e Innovacion of the Spanish Government.